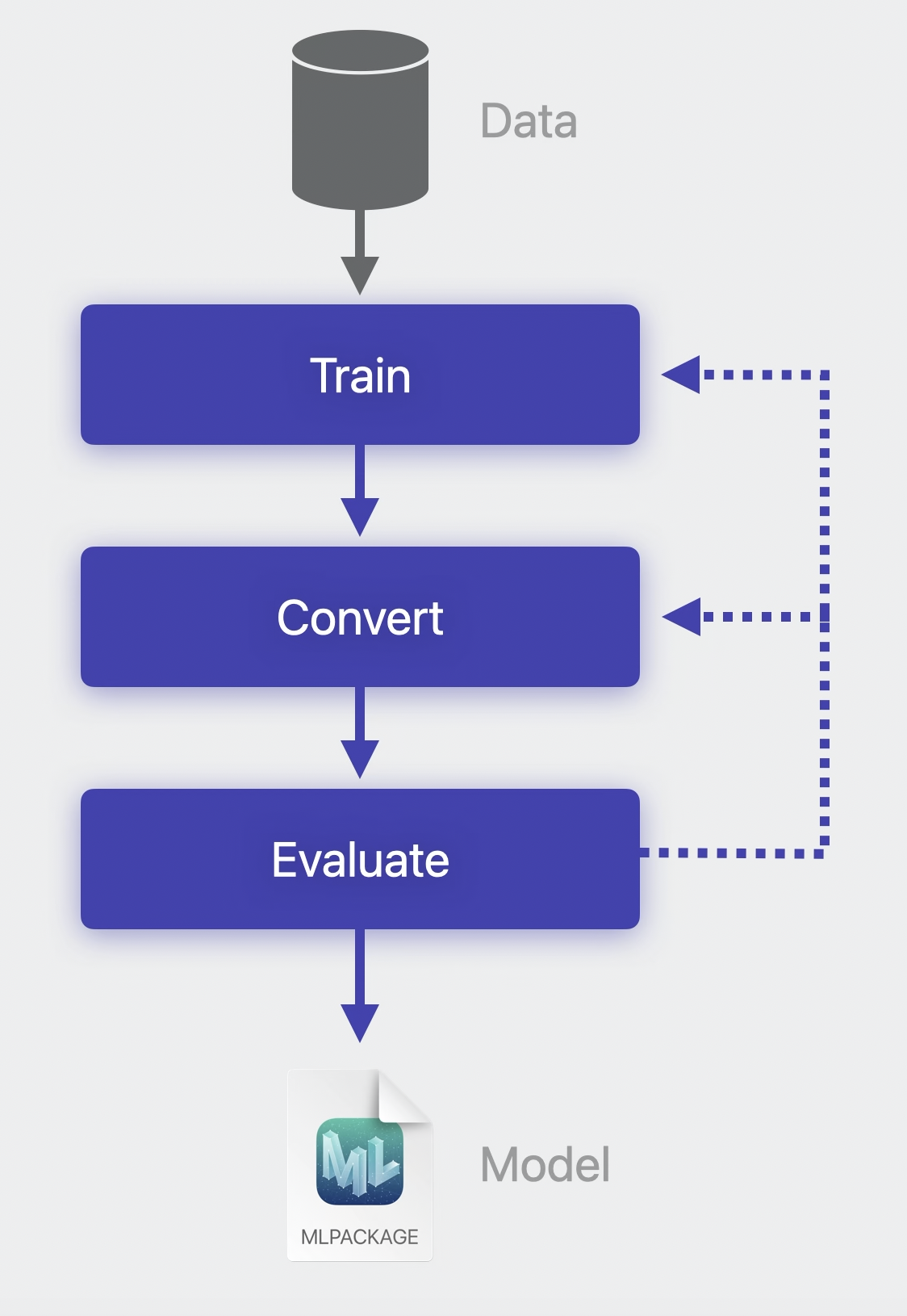

Core ML offers developers two paths for model development: Create ML for quickly creating models, or Core ML Tools for converting models from popular machine learning frameworks such as PyTorch and TensorFlow into the Core ML format:

Two ways of developing models: Create ML (left) and Core ML Tools (right)

Using Core ML models instead of third-party models improves on-device performance: Core ML runs them on the CPU, GPU, and Neural Engine (where available) while minimizing memory footprint and power consumption.
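To make the compute-unit point concrete, here is a minimal sketch of loading a compiled model and letting Core ML pick among the available hardware. The `loadModel(at:)` helper is a hypothetical name used for illustration, and the URL is assumed to point at a compiled `.mlmodelc` in your app; none of this is taken from the apps discussed in this post.

```swift
import Foundation
import CoreML

/// Hypothetical helper: loads a compiled Core ML model (.mlmodelc) from `url`.
func loadModel(at url: URL) throws -> MLModel {
    let configuration = MLModelConfiguration()
    // .all lets Core ML schedule work on the CPU, GPU, and Neural Engine.
    // More restrictive options include .cpuOnly and .cpuAndGPU.
    configuration.computeUnits = .all
    return try MLModel(contentsOf: url, configuration: configuration)
}
```

Restricting `computeUnits` can be useful when debugging or comparing performance, but `.all` is usually the reasonable starting point.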

Many top apps use Core ML models, including DoorDash, Zoom, TurboTax, and Instagram. Using Emerge's Size Analysis tool, we can see that Zoom ships Core ML models like "lip_sync.mlmodelc" and "flm.mlmodelc", potentially to support features like lip synchronization and facial landmark detection:

X-Ray of Zoom's Core ML models on iOS

iOS 17 introduced faster prediction times, an API for runtime inspection of compute device availability, and asynchronous prediction for Core ML. For more information, check out Apple’s developer documentation.
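As a rough sketch of what two of these additions look like in practice, the snippet below lists the compute devices Core ML reports at runtime and wraps the asynchronous prediction call. The function names `availableComputeDeviceNames` and `predictAsync` are hypothetical, and the model and input are assumed to be supplied by your own code.

```swift
import CoreML

/// Hypothetical helper: names the compute devices Core ML reports at runtime.
@available(iOS 17.0, macOS 14.0, tvOS 17.0, watchOS 10.0, *)
func availableComputeDeviceNames() -> [String] {
    MLComputeDevice.allComputeDevices.map { device in
        switch device {
        case .cpu: return "CPU"
        case .gpu: return "GPU"
        case .neuralEngine: return "Neural Engine"
        @unknown default: return "Unknown"
        }
    }
}

/// Hypothetical helper: runs a prediction with the async API.
@available(iOS 17.0, macOS 14.0, tvOS 17.0, watchOS 10.0, *)
func predictAsync(model: MLModel, input: MLFeatureProvider) async throws -> MLFeatureProvider {
    // In an async context this resolves to the asynchronous overload.
    try await model.prediction(from: input)
}
```

Checking the device list before loading a model is one way to decide, for example, whether a feature that depends on the Neural Engine should be enabled on a given device.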